Hi, Miles!

Thanks for answering so quickly, and sorry for saying a lot of strange things. Maybe I can explain better what I have seen in my simulations.

I'm trying to reproduce all the results of the paper by S. R. Manmana et al. on the ionic Hubbard model, "https://journals.aps.org/prb/abstract/10.1103/PhysRevB.70.155115". In particular, I want to compute the finite-size scaling of the spin gap for this model.
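For reference, here is a minimal Python sketch of this kind of finite-size analysis. It assumes the usual definition of the spin gap at half filling, Δ_s(L) = E_0(L, S^z = 1) − E_0(L, S^z = 0), with both ground-state energies taken from separate DMRG runs in the two S^z sectors. The helper names (`spin_gap`, `extrapolate_gap`, `flag_jumps`) and the quadratic fit in 1/L are my own illustrative choices, not taken from the paper. `flag_jumps` simply lists the lengths at which the gap grows with L, assuming the gap should approach its L → ∞ value from above.

```python
import numpy as np


def spin_gap(e_sz1, e_sz0):
    """Spin gap Delta_s(L) = E0(L, Sz=1) - E0(L, Sz=0) (assumed definition)."""
    return e_sz1 - e_sz0


def extrapolate_gap(lengths, gaps, order=2):
    """Fit Delta_s(L) to a polynomial in 1/L and return its value at 1/L = 0."""
    x = 1.0 / np.asarray(lengths, dtype=float)
    y = np.asarray(gaps, dtype=float)
    coeffs = np.polyfit(x, y, order)  # coefficients, highest power first
    return float(coeffs[-1])          # constant term = L -> infinity estimate


def flag_jumps(lengths, gaps):
    """Lengths at which the gap is larger than at the previous (smaller) L."""
    return [lengths[i] for i in range(1, len(gaps)) if gaps[i] > gaps[i - 1]]


# Synthetic, made-up data: Delta_s(L) = 0.1 + 2/L + 5/L**2 (not real results)
lengths = [64, 128, 256, 512]
gaps = [0.1 + 2.0 / L + 5.0 / L**2 for L in lengths]
print(extrapolate_gap(lengths, gaps))  # close to 0.1
print(flag_jumps(lengths, gaps))       # []
```

With gaps such as `[0.2, 0.15, 0.3, 0.25]` for the same lengths, `flag_jumps` would return `[256]`, pointing at the system size where the scaling breaks down.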

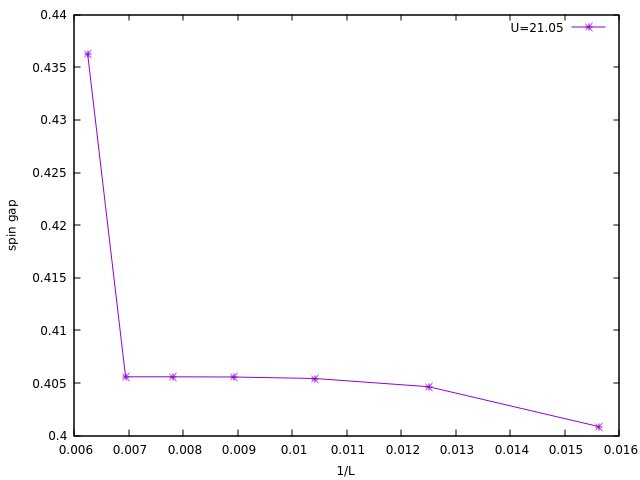

I have performed several calculations in the region delta = 20.0, U = 21.0 to U = 22.0, for lattice lengths from L = 64 up to L = 512. For almost all values in this parameter region I get good finite-size scaling of the gap, as reported by Manmana et al., but for values near or below U = 21.0 the spin gap suddenly jumps for lattices with L > 128. Here is a figure showing this behaviour.

I checked the output of the DMRG sweeps, looking for a problem, and found that the number of kept states was very small (64 to 100) compared with the same calculation at a higher value of U (U = 21.45, where at least 400 states are needed for the same lattice length). Because of this, I increased the number of states to at least 800, the maximum value considered by Manmana et al., but I obtained the same energy.
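One common way to check whether more states actually change the result (a standard DMRG practice, not something described in the post or the paper) is to record the ground-state energy together with the discarded weight (truncation error) of the final sweep for several `maxdim` values, and extrapolate linearly, E(ε) ≈ E_0 + a ε, to ε → 0. Doing this separately in the S^z = 0 and S^z = 1 sectors gives an extrapolated gap. The sketch below assumes your DMRG output reports a discarded weight per run; the function name and the numbers are hypothetical, and the linear form is only expected to hold once ε is small.

```python
import numpy as np


def extrapolate_in_truncation_error(trunc_errors, energies):
    """Linear fit E(eps) = E0 + a*eps; returns (E0, a).

    trunc_errors: discarded weight of the last sweep for each maxdim run
    energies:     corresponding ground-state energies
    """
    eps = np.asarray(trunc_errors, dtype=float)
    e = np.asarray(energies, dtype=float)
    slope, intercept = np.polyfit(eps, e, 1)  # degree-1 fit, slope first
    return float(intercept), float(slope)


# Synthetic, made-up numbers: E0 = -10.0 and slope a = 50.0 (not real results)
eps = [1e-5, 1e-6, 1e-7]
energies = [-10.0 + 50.0 * w for w in eps]
e0, a = extrapolate_in_truncation_error(eps, energies)
print(e0, a)  # approximately -10.0 and 50.0
```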

I'm trying to understand what is happening in this system, and the only explanation I can think of is an accumulation of floating-point roundoff error, as you correctly inferred from my first question. The strangest thing is that I have observed this behaviour not only with tensor networks, but also with another Fortran code that does not use MPS.

These are the DMRG parameters for these simulations:

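| maxdim | mindim | cutoff | niter | noise |
|--------|--------|--------|-------|-------|
| 100 | 1 | 1E-5 | 6 | 1E-5 |
| 200 | 1 | 1E-6 | 6 | 1E-5 |
| 256 | 1 | 1E-7 | 6 | 1E-8 |
| 400 | 1 | 1E-8 | 5 | 1E-9 |
| 400 | 1 | 1E-8 | 5 | 1E-10 |
| 800 | 1 | 1E-9 | 5 | 1E-11 |
| 800 | 1 | 1E-9 | 4 | 1E-11 |
| 800 | 1 | 1E-9 | 4 | 1E-12 |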